How Salesforce Data Cloud Fuels Fast, Accurate, Trusted AI Agents

Nearly 60% of the company's top 100 deals in Q1 FY26 included both Data Cloud and AI

Welcome to the Cloud Database Report. I’m John Foley, a long-time tech journalist, including 18 years at InformationWeek, who also worked in strategic comms at Oracle, IBM, and MongoDB. Connect with me on LinkedIn. This post is sponsored by Salesforce. The views and analysis are my own.

AI requires data, but bits and bytes aren’t enough. The data must be integrated, clean, complete, accurate, consistent, well-governed, timely, and trusted. Then it must be indexed, secure, and actionable.

For all that, you need a modern data foundation. You need a data cloud.

I first wrote about data clouds in the Cloud Database Report four years ago in an article titled “What is a Data Cloud?” At the time, data clouds were an emerging model for data ingress, egress, and sharing. Here’s what I said:

“What data clouds have in common is an all-encompassing data architecture and a business objective to put more data and insights into the hands of more people….Essential components include data integration and replication, data engineering, sharing, and analytics, all overlaid with end-to-end security and governance.”

That definition still holds, but the world has changed. We now have GenAI and agentic AI, along with serious talk of AGI and superintelligence. Data clouds have had to adapt to enable this next level of AI.

Salesforce gets this. The company has hitched its agentic AI platform, Agentforce, to its Data Cloud with capabilities such as AI modeling, zero copy integration, and governance that are essential to enterprise AI.

Now, a growing number of its customers are using these technologies together. In Q1 of Fiscal 2026, nearly 60% of Salesforce’s top 100 deals included both Data Cloud and AI. That led to an impressive outcome: Data Cloud and AI annual recurring revenue increased 120% year over year, surpassing $1 billion.

Data Cloud may not enjoy the same level of buzz as the hyperscale clouds, but the word is getting around. “We are still working really to be able to kind of communicate with every single one of our customers on the importance of Data Cloud,” said CEO Marc Benioff on the company’s Q1 earnings call. “And yet Data Cloud just remains this incredibly fast-moving product.”

Fast-moving, yes—and gaining new capabilities so quickly it can be hard to keep up. So I reached out to two of the Salesforce leaders who have a hand in Data Cloud’s success—Rahul Auradkar, EVP & GM of Unified Data Services, and Muralidhar Krishnaprasad, President and CTO of Unified Agentforce Platform—to get a better understanding of what’s new and what’s next.

The elusive single source of truth

One of the first things you will hear when talking about Data Cloud and AI is the need to harmonize data, sometimes from hundreds of sources, and to unify disparate data records into a single source of the truth for activation across sales, service, marketing, commerce, and other customer engagement scenarios.

I shake my head when I hear terms like “single source of the truth” and “360-degree view of the customer” because, as a tech journalist who has covered business intelligence for more than 25 years, I know that this has long been the goal but has seldom been reached. However, based on the demos and real-world use cases I’ve seen, Salesforce may finally be delivering on that promise.

Krishnaprasad says Salesforce learned these lessons the hard way—by unifying years of its own customer records. The company once believed it had 200 million customers, but after de-duplicating the IDs, the real number was half of that. “So when we talk about data accuracy, it starts with really understanding the customer,” he says. The payoff is that when customer records have been consolidated and normalized, “I now know more about that person,” says Krishnaprasad.
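Krishnaprasad’s de-duplication story is, at its core, an identity-resolution problem. The sketch below is a minimal illustration under stated assumptions, not Salesforce’s implementation. It assumes hypothetical records that carry only an email and a phone number, normalizes both, and uses a union-find structure to merge any records that share a normalized value. Real identity resolution typically applies many more match rules, such as fuzzy name and address matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """A hypothetical source record; real profiles carry many more fields."""
    record_id: str
    email: str
    phone: str


def normalize_email(email: str) -> str:
    return email.strip().lower()


def normalize_phone(phone: str) -> str:
    # Keep digits only, so "(555) 0100" and "555-0100" compare equal.
    return "".join(ch for ch in phone if ch.isdigit())


def resolve_identities(records: list[Record]) -> list[set[str]]:
    """Group record IDs that share a normalized email or phone (union-find)."""
    parent = {r.record_id: r.record_id for r in records}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_seen: dict[tuple[str, str], str] = {}  # match key -> first record ID
    for r in records:
        keys = [("email", normalize_email(r.email)), ("phone", normalize_phone(r.phone))]
        for key in keys:
            if not key[1]:
                continue  # empty values must never link two records
            if key in first_seen:
                union(first_seen[key], r.record_id)
            else:
                first_seen[key] = r.record_id

    groups: dict[str, set[str]] = {}
    for r in records:
        groups.setdefault(find(r.record_id), set()).add(r.record_id)
    return list(groups.values())


# Hypothetical sample: four records that describe only two people.
records = [
    Record("a1", "Pat@Example.com", "555-0100"),
    Record("b7", "pat@example.com ", ""),
    Record("c3", "", "(555) 0100"),
    Record("d9", "lee@example.com", "555-0199"),
]
profiles = resolve_identities(records)
print(f"{len(records)} records -> {len(profiles)} unified profiles")
```

Record `b7` joins `a1` through its email, and `c3` joins through its phone number even though it has no email. Chained matches like this are why de-duplicated counts can shrink so sharply.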

Of course, AI agents need this kind of timely, unified, accurate data. Because agents can take actions autonomously, including interacting with customers, there’s no margin for error, bias, hallucination, or other unpredictable behavior caused by unreliable data.

“Since agents promise the value of automation that augments humans, there is an expectation that there is a higher degree of relevance and precision that agents are drawing from this unified data,” explains Auradkar. “There is a higher bar.”

Open and extensible

In simple terms, Salesforce Data Cloud uses a lakehouse architecture that runs on AWS or Google Cloud, and customers choose the cloud infrastructure through Salesforce’s Hyperforce public-cloud offering. Data Cloud is open and extensible, built on open standards such as Apache Iceberg (a table format) and Apache Parquet (a columnar file format for data storage), which can be accessed from popular engines like Apache Spark, Google BigQuery, Snowflake, and others.

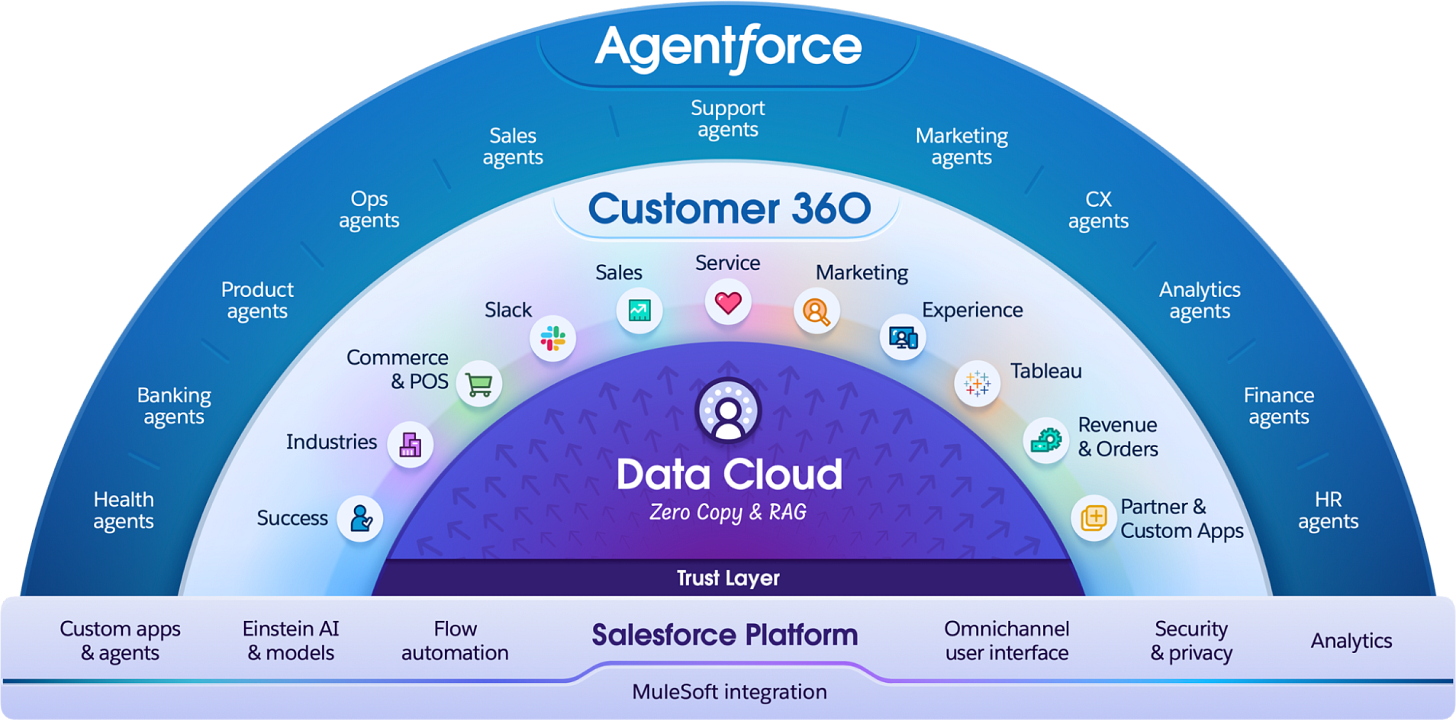

As you can see from the diagram above, Data Cloud also serves as the “binding layer” to Salesforce’s Customer 360 apps, says Auradkar. Customer 360 now includes pre-built Agentforce agents, making data connectivity seamless.

Data Cloud is sometimes called a Customer Data Platform. In fact, Salesforce is in Gartner’s Leaders quadrant for CDPs. However, given the tightening alignment of data and AI, I think it may be time for a new term: Agentic Data Platform. Salesforce is positioning Data Cloud to be one of the major building blocks of an agentic enterprise.

The business case for Data Cloud has been made stronger by the big idea of digital labor and a limitless workforce, made possible by legions of AI agents that work independently or augment the tasks of human workers. Salesforce has been adding new capabilities to enable this kind of hybrid worker environment. For example, a semantic data model lets agents and humans use data consistently. And a session-tracing data model in Data Cloud captures agent activity for analysis and refinement.

Data fluidity vs. gravity

When I first talked to Auradkar last fall, our conversation was largely about the ways Data Cloud frees up “trapped data” in corporate databases and apps. Salesforce has invested heavily in data connectivity, including MuleSoft APIs and connectors and, most recently, Model Context Protocol (MCP) servers.

One of Data Cloud’s biggest innovations is Zero Copy, which replaces traditional data replication and extract/transform/load (ETL) processes with the ability to access data where it resides. This includes leading data platforms such as Snowflake, Amazon Redshift, Google BigQuery, and Databricks.

The beauty of this approach is that it enables data access without copying, moving, or reformatting, providing near-real-time data to the reasoning engine and agents. Next, Salesforce is introducing a Zero Copy Framework, so partners can create their own Zero Copy connectors.
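To make the Zero Copy idea concrete, here is a minimal sketch of the general pattern. It is not Salesforce’s Zero Copy Framework. The `ZeroCopyConnector` interface, the `SqliteConnector` class, and the `coverage` table are all hypothetical, and an in-memory SQLite database stands in for an external warehouse such as Snowflake. The point is that the query runs where the data lives and only result rows come back, so a change at the source shows up on the next read with no re-copy.

```python
import sqlite3
from typing import Any, Optional, Protocol


class ZeroCopyConnector(Protocol):
    """Hypothetical interface: run a query at the source and return only the rows."""

    def query(self, sql: str, params: tuple[Any, ...] = ()) -> list[tuple[Any, ...]]:
        ...


class SqliteConnector:
    """Stand-in connector; SQLite plays the role of the external data platform."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn

    def query(self, sql: str, params: tuple[Any, ...] = ()) -> list[tuple[Any, ...]]:
        # The source system executes the query; no table is copied locally.
        return self._conn.execute(sql, params).fetchall()


def lookup_coverage(connector: ZeroCopyConnector, patient_id: str) -> Optional[tuple[Any, ...]]:
    rows = connector.query(
        "SELECT plan, status FROM coverage WHERE patient_id = ?", (patient_id,)
    )
    return rows[0] if rows else None


# Hypothetical source data living in the "external" system.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE coverage (patient_id TEXT, plan TEXT, status TEXT)")
warehouse.execute("INSERT INTO coverage VALUES ('p-001', 'Gold PPO', 'active')")

connector = SqliteConnector(warehouse)
before = lookup_coverage(connector, "p-001")

# The source changes, and the next read sees it without any ETL re-run.
warehouse.execute("UPDATE coverage SET status = 'lapsed' WHERE patient_id = 'p-001'")
after = lookup_coverage(connector, "p-001")
print(before, after)
```

A partner-built connector would expose the same kind of narrow query surface over its own platform. The trade-off versus ETL is that every read depends on the source being reachable and fast.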

These myriad integration points allow for what Salesforce calls “data fluidity”: fast and seamless integration. That’s critical to the performance of AI agents, which must respond in seconds or milliseconds. Case in point: Salesforce eats its own dog food with an Agentforce customer support agent on Help.salesforce.com that ingests data from hundreds of sources. After agents were introduced there, case volume grew by 7% while human intervention by support staff declined 7%.

With data that’s been harmonized from many sources in this way, “agents become that much more powerful,” says Auradkar.

To its credit, Salesforce is not looking to displace the enterprise data warehouses and other legacy data systems that represent huge investments for some organizations. “We’re not suggesting that Data Cloud will replace all of those silos,” says Auradkar. “They are there for good reason.”

What’s new and next

In the ten months since Agentforce was launched at Dreamforce 2024, Salesforce has been busy adding a wide range of new capabilities to Data Cloud. Among the new developments:

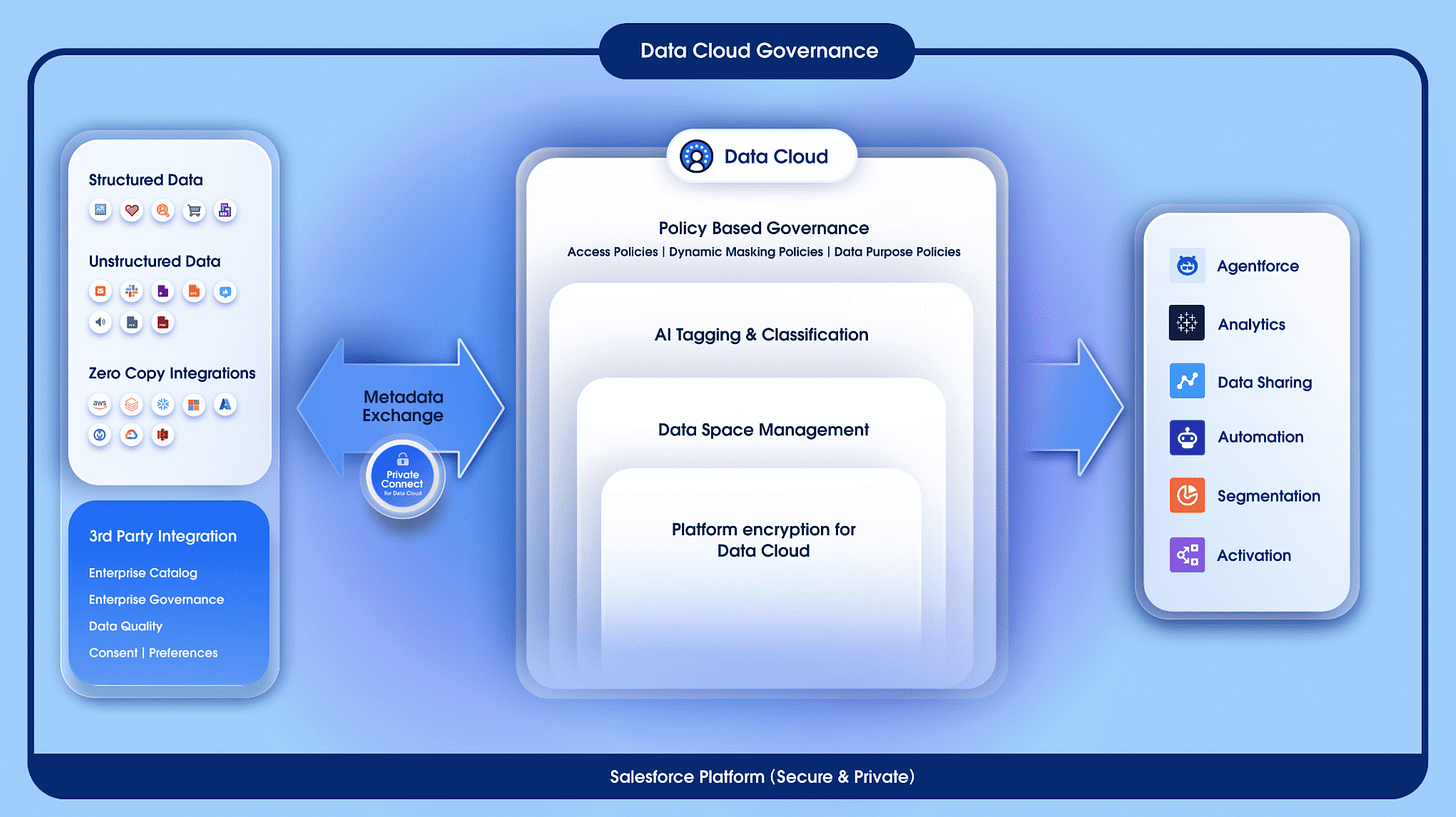

Data Governance - There’s a growing set of capabilities for policy and access management and other ways of establishing trusted AI. (See chart above.) For example, customers can establish private, direct connections with third-party data sources. Tagging and classification have been automated, while dynamic data masking hides sensitive info. With such automation, says Auradkar, “you can enforce policy at scale.”

Data Activations - Data can be activated in apps, analytics, and agents. An activation might be a recommended action in a marketing campaign, a product offering, or an insight shared by an agent with users in Slack. Activations also work across apps, email, text, WhatsApp, and other surfaces.

Bring your own models and code - In keeping with Salesforce’s open philosophy, customers can bring their own models, including large language models, built on platforms such as Amazon SageMaker or Google’s Vertex AI. BYOM, as it’s called, is similar to Zero Copy in that it lets customers manage data and build models on their platforms of choice without having to lift and shift. On a related note, the company recently introduced Bring Your Own Code, allowing users to execute custom Python code in Data Cloud.

Predictive AI with Einstein Studio - Users can create their own predictive AI models, import predictions from third-party models, and connect them to LLMs from providers such as OpenAI, including through Azure OpenAI. It’s like a gateway to GenAI, says Auradkar. “You want agents to know something based on predictions,” he explains.
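The dynamic data masking described under Data Governance can be illustrated as a policy check applied at read time. This is a minimal sketch under stated assumptions, not Data Cloud’s policy engine. The roles, the field names, and the `UNMASKED_ROLES` policy are hypothetical. Masking happens as data is read, so the stored record is never altered. As a simplification, fields without a policy entry are treated as non-sensitive.

```python
from typing import Mapping

# Hypothetical policy: roles allowed to see each sensitive field unmasked.
# Fields not listed here are treated as non-sensitive in this sketch.
UNMASKED_ROLES: dict[str, frozenset[str]] = {
    "ssn": frozenset({"compliance_officer"}),
    "diagnosis": frozenset({"care_team"}),
    "email": frozenset({"care_team", "support_rep"}),
}

MASK = "****"  # fixed-width mask, so the original value's length is not revealed


def mask_record(record: Mapping[str, str], role: str) -> dict[str, str]:
    """Return a copy of the record with fields masked according to the caller's role."""
    view: dict[str, str] = {}
    for field, value in record.items():
        allowed = UNMASKED_ROLES.get(field)
        if allowed is None or role in allowed:
            view[field] = value  # non-sensitive, or this role is permitted
        else:
            view[field] = MASK
    return view


patient = {
    "name": "Pat Doe",
    "email": "pat@example.com",
    "diagnosis": "Type 2 diabetes",
    "ssn": "123-45-6789",
}
print(mask_record(patient, "support_rep"))
print(mask_record(patient, "care_team"))
```

The same record yields different views for different roles. Keeping the policy in one table is what makes it possible to “enforce policy at scale” rather than hand-coding masks into each app.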

There’s more in the development pipeline. Stay tuned for agents that autonomously perform tasks in Data Cloud, such as data harmonization and data segmentation. Also, Salesforce recently gave a first look at data clean rooms, where customers will be able to collaborate on data in a secure, privacy-safe environment.

Demo: agents & humans collaborating

What does it look like when humans and agents work together as a tag team and share data? Krishnaprasad walked me through it.

Here’s the demo: A person with Type 2 diabetes visits a healthcare provider’s website. An AI agent is the person’s first point of contact. To confirm the patient’s health insurance information, the agent accesses data from the Snowflake cloud using Zero Copy.

Next, the patient asks a lifestyle question. The agent pulls a response from the healthcare provider’s document archive, using both vector and keyword indexes to gather information and provide a personalized response. The answer combines structured data from the patient’s profile with unstructured data from the document store.

The patient then asks for a physician referral, prompting the agent to automatically share a lead referral to Slack. That’s followed by a question about medical devices.

Now things get more interesting. The patient inquires about medical trials, and the agent knows to escalate this type of question to a human representative. The human rep has access to the transcript of the patient/agent interaction, yet when the human rep sees the patient profile, private information has been masked due to automated access controls.

Finally, the patient asks if they qualify for the diabetes trial. The human rep, using information provided by the agent, determines that the patient does qualify.

“In these sensitive situations, you want a human in the picture,” says Krishnaprasad. “This is a classic example of where the agent can assist the human.”
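The lifestyle question in the demo relied on both vector and keyword indexes. One common way to combine the two is reciprocal rank fusion (RRF). The sketch below assumes that technique, which may not be what Salesforce actually uses. The documents, their three-number “embeddings,” and the query vector are hypothetical stand-ins for output from a real embedding model, and keyword scoring is reduced to simple term overlap.

```python
import math


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that appear in the text (a crude keyword index)."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rrf_fuse(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Reciprocal rank fusion: each ranking adds 1 / (k + rank) to a document's score."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)


def hybrid_search(query: str, query_vec: list[float], docs: dict[str, dict], top_n: int = 2) -> list[str]:
    by_keyword = sorted(docs, key=lambda d: keyword_score(query, docs[d]["text"]), reverse=True)
    by_vector = sorted(docs, key=lambda d: cosine(query_vec, docs[d]["vec"]), reverse=True)
    return rrf_fuse([by_keyword, by_vector])[:top_n]


# Hypothetical document store with toy embeddings.
docs = {
    "diet-guide": {"text": "meal planning tips for type 2 diabetes", "vec": [0.9, 0.1, 0.0]},
    "exercise": {"text": "walking and exercise help manage blood sugar", "vec": [0.7, 0.6, 0.1]},
    "billing": {"text": "how to update your insurance billing details", "vec": [0.0, 0.1, 0.9]},
}
results = hybrid_search("exercise tips for diabetes", [0.8, 0.5, 0.0], docs)
print(results)
```

Keyword matching ranks the diet guide first because it shares more words with the query. Vector similarity ranks the exercise page first. Fusing the two rankings surfaces both and drops the unrelated billing page. RRF works on ranks rather than raw scores, so the two indexes never need their scores calibrated against each other.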

Building blocks for agents at scale

Salesforce frames its broad strategy as comprising agents, data, apps, and metadata. Metadata is hugely important because it provides a common layer of meaning for data and defines the workflows and automations that act on it, a role that grows as more data is integrated and activated.

Going forward, a major question for Salesforce and its customers is this: How do today’s thousands of entry-level AI agent projects eventually scale to enterprise-wide deployments?

Salesforce has a growing portfolio of products and capabilities to help with that. They include Agentforce Test Center, Developer Edition, Command Center, and Omni Supervisor, which provides a unified view into the work being done by humans and agents.

Assuming no regulatory roadblocks, Salesforce’s pending $8 billion acquisition of Informatica may provide another injection of enterprise-class data management capabilities. Without commenting on the deal itself or any product planning, Auradkar noted that metadata cataloging, master data management, data lineage, and policy management—all areas where Informatica plays—are capabilities that are important to Salesforce customers.

Having attended Informatica World earlier this year, I will add that Informatica showed prototype AI agents for data management. In the demo, the agents collected data from Oracle and SAP apps, automatically cataloged the data, performed a quality check on the staged data, then created and applied data-quality rules to increase the quality score on the data set. It was an impressive display of automation possibilities yet to come.

Even with all of this progress, my expectation is that data management for AI will continue to be a challenge for the foreseeable future because there will be more data, more integrations, more agents, and rising expectations. Yet, if AI works as intended, it will increasingly do much of the heavy lifting.