Good AI vs. Bad Data: The Fight to Get It Right

At Informatica World, AI gets a data-quality litmus test, with mixed results

Welcome everyone! I’m John Foley, a long-time tech journalist who has also worked in strategic comms at Oracle, IBM, and MongoDB. Now I’m an independent tech writer. I attended Informatica World on assignment for Method Communications.

TLDR:

AI projects require good, clean, trusted data

Many teams lack the technologies and processes to generate AI-ready data

Data quality is the #1 issue, according to one survey

Some companies are overcoming the challenges — here’s how

The risks of inaccurate data

Agentic AI is coming fast — but enterprise data isn’t ready.

That’s the opportunity businesses now face, and the hairball of challenges that comes with it. For AI to be trusted, business data must be timely, accurate, complete, consistent, reliable. And there may be consequences for those that don’t get it right: bias, hallucinations, breaches, rogue agents, lost customers, even “poisoned data.”

“If GenAI fails, we see potential operational failure,” said Jason du Preez, Informatica VP and an expert in data privacy and safety at Informatica World last week in Las Vegas. “The blast radius is much wider.” That also applies to agentic AI, where autonomous agents are capable of reasoning and taking action. You can imagine nightmares of what they might do with faulty data.

This dichotomy — good AI at risk from bad data — is where the battle is being fought in many businesses. The Informatica world theme, “Ready, Set, AI,” is where everyone aspires to be. But many still have data cleanup to do before they get there.

Here’s the situation: “94% of companies recognize that the data they collect and store is not entirely accurate,” according to KPMG.

And, “63% of organizations either do not have or are unsure if they have the right data management practices for AI,” according to Gartner.

These are the head-rattling speed bumps on the road to generative and agentic AI. Gartner warns: “Organizations that fail to realize the vast differences between AI-ready data requirements and traditional data management will endanger the success of their AI efforts.”

We shouldn’t be surprised. Data readiness is not, and never has been, easy. The phrase “garbage in, garbage out” is ‘50s era. Some CIOs have been sorting through the junk piles their entire careers.

I arrived at Informatica World with a few questions:

Why, after so many years of prepping and cleansing, is data still not AI-ready?

Are there signs of improvement?

What steps can IT teams take now to prepare for GenAI and agentic AI?

What bad things happen when you don't have good data?

The encouraging news is that, with best practices and technologies, you can get to the necessary state of data readiness. However, the pace of change and complexity of today’s data landscape mean that data for AI isn’t about to get any easier, even for organizations that are built for it.

Getting from here to there

Given the buzz, you might think enterprise AI is further along than it is. However, the evidence suggests many or even most of these efforts are still in startup mode — dev/test, proof of concept, not ready for prime time. One recent study found that “many organizations struggle to bridge the gap between experimentation and enterprise-wide deployment.”

Even Informatica CEO Amit Walia — who brims with enthusiasm for data-driven AI strategies — said in his opening keynote, “A lot of those projects are not there in terms of completion.”

How do businesses get from here (low-quality data) to there (high-quality AI)?

There’s no shortage of tools and technologies. Informatica introduced its first three AI agents — a Data Quality Agent, Data Discovery Agent, and Data Ingestion Agent — which are designed to automate some of the tedious work that goes into building and maintaining data pipelines. Customers will get their first look at those agents in the second half of this year.

These newly emerging autonomous agents promise to be part of the long-term solution because they can discover, ingest, integrate, catalog, and clean up data, which are what’s required to make data AI-ready. Informatica is starting with about 10 agents, but Walia hints there could be many more.

In an impressive demo of how these agents will eventually be able to streamline data workflows, Informatica showed what it calls AI Agent Engineering in a supply-chain scenario. The agents collected data from Oracle and SAP apps, automatically cataloged the data, performed a quality check on the staged data, then created and applied data-quality rules to increase the quality score on the data set.

It’s the kind of intelligent automation that may one day let data engineers and other practitioners catch up to and even get ahead of the data management challenges they currently face.

Museum of legacy tech

There are many reasons that data engineers and architects struggle with quality. I put complexity — data complexity and infrastructure complexity — at the top of the list.

Enterprise data, by its nature, keeps growing in volume (terabytes, petabytes, exabytes), and new data types such as vectors, multimodal, and synthetic keep coming along. See my post below.

“The companies that solve data complexity are the ones that can do AI exceptionally well,” Krish Vitaldevara, Informatica EVP and Chief Product Officer, told me in an interview.

Data infrastructure is the other half of the complexity challenge. Many orgs must renovate their tech stack to build and implement in-house GenAI and agentic AI. The new components might include vector databases, RAG, agent builders, MCP, A2A, model tools, frameworks, etc. The best way to keep up, advises Vitaldevara, is to “innovate into the platforms you already use.”

Informatica has developed GenAI blueprints, i.e. architectural guidelines, with six major partners — AWS, Databricks, Google Cloud, Microsoft, Oracle, and Snowflake — to help customers stitch it all together. And last week it announced new partnerships with Salesforce and Nvidia.

That’s excellent company to be in, but plug-and-play architectures are never as easy as they look in a slide deck. And a lot of data is siloed away in legacy systems.

“Of course everybody wants AI today,” said Richard Ganley, Informatica SVP of Global Partners. “But they have a museum of technology they need to modernize.”

In a presentation on AI architecture, Kevin Petrie, VP of Research with analyst firm BARC, said the “must haves” of modern architecture include the ability to integrate old and new technologies, modular design, open APIs/formats, and AI-assisted data tasks.

The trick is to do that with proficiency and dare I say elegance. “The fear I have is it will become more complex,” admitted John Abel, CIO at Proofpoint, a cybersecurity SaaS provider and Informatica customer.

Simply put, there’s a footrace underway between complexity and complexity-solving solutions. So far, complexity is ahead.

The #1 obstacle

I asked Kevin Petrie why data is still not AI-ready after so long and for so many. “Organizations have struggled with data quality for decades,” he responded. “A primary challenge is keeping up with the proliferation of data sources, platforms, tools, devices, and users. This sprawl leads to duplicative, contradictory data that prevent a single source of truth.”

In a BARC survey of people with experience in data & AI, data quality/lineage was the most-cited challenge and obstacle, identified by 45% of respondents.

The other challenges/obstacles, in order, were data privacy and regulatory concerns (43%); lack of AI skills (43%); incompatible tools or systems (27%); and data access/availability (27%). The survey was sponsored by Informatica and other vendors.

Hope isn’t lost

Counterbalancing the hard realities were case studies of companies that have their data and AI houses in good order. Tech leaders from Citizens Bank, Royal Caribbean, Indeed, Gilead, Liberty Mutual, JetBlue, Nutanix, and Sutter Health were among those sharing lessons learned and next steps.

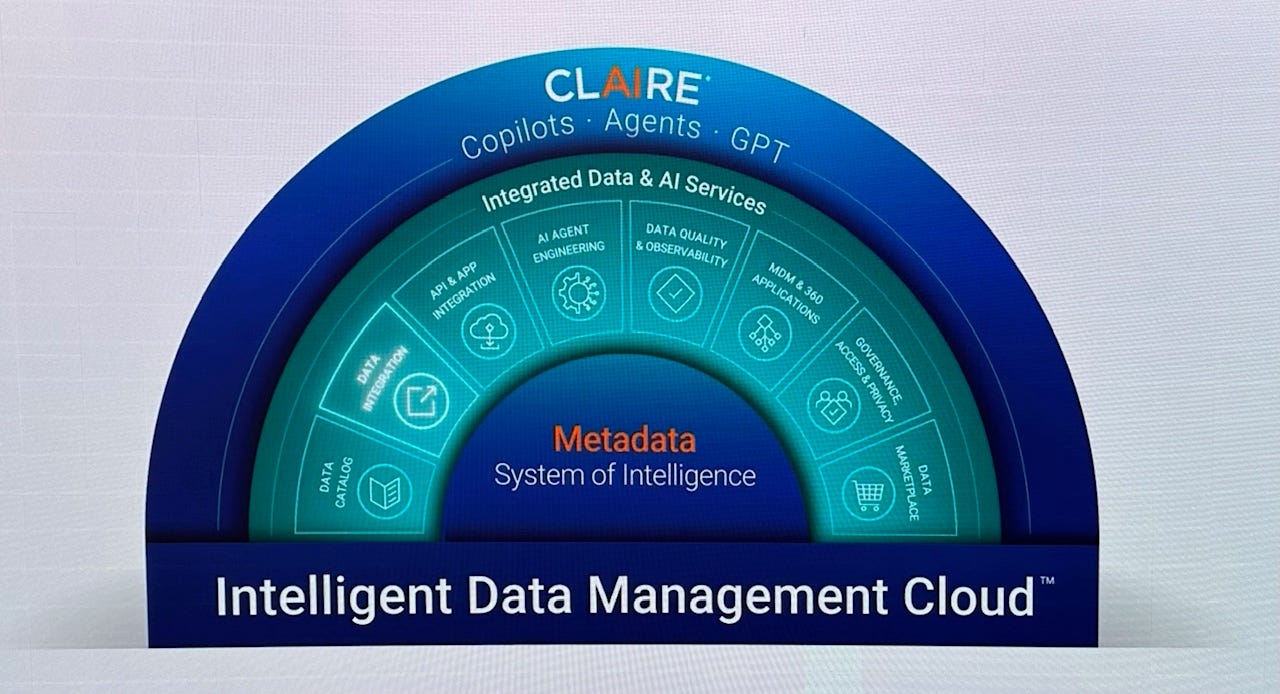

Best practices include master data management, data cataloging, observability, and governance. Informatica also introduced its metadata “system of intelligence,” which is at the core of its Intelligent Data Management Cloud (IDMC). All of which pave the way to data quality — and then to AI. “Your data is your AI differentiator,” said Walia.

A shining example of what’s working was shared by Katie Meyers, Chief Data Officer of Charles Schwab. The brokerage firm laid the foundation with a modernization strategy that included moving to Google Cloud and IDMC. Its first step in AI was a knowledge assistant that’s now available to its 10,000 customer representatives, saving hundreds of thousands of minutes per month. Next is a tool to help its financial consultants surface insights from the firm’s proprietary research.

When asked by Walia what’s next, Meyers said “moving from internal-facing use cases to customer-facing use cases.” Charles Schwab envisions using AI to establish one-to-one advisory relationships with more of its retail clients.

Like everyone else, Meyers acknowledged that working with AI may require new processes, practices, and problem solving. “We’re thinking about security and privacy in new ways,” she said, adding, “By far, the biggest challenge is data integrity.”

During a customer panel earlier in the day, Informatica CIO Graeme Thompson got to the crux of the opportunity/challenge dichotomy. On a scale of 1 to 10, Thompson asked the three data execs, how excited are you about AI? And how worried?

“Excited — I’m on the 10 level,” responded one. “And 11 out of 10, I’m worried.” The others echoed that sentiment.

That’s the Yin and Yang of data & AI. The opportunities and challenges are interconnected and interdependent. That’s has always been true in IT — but never more so than now.

"This dichotomy — good AI at risk from bad data — is where the battle is being fought in many businesses."

Great summary of the issue, John!